Have you ever wondered how LLMs like GPT-4 become so powerful and accurate? It’s not just the vast amount of data they’re trained on; fine-tuning plays a crucial role in refining these models for specific tasks. Fine-tuning takes a general-purpose model and hones it to excel in particular areas, making it more relevant to your needs. Imagine having an AI that not only understands language but also speaks your industry’s language fluently. That’s the power of fine-tuning. It can improve AI-driven initiatives such as customer assistance, content creation, and specialized apps by tailoring the AI to your specific goals. Ready to explore how fine-tuning can elevate your AI capabilities? This blog walks you through each step and offers advice on getting the most out of your AI. Let’s dive in and discover how fine-tuning can transform your projects!

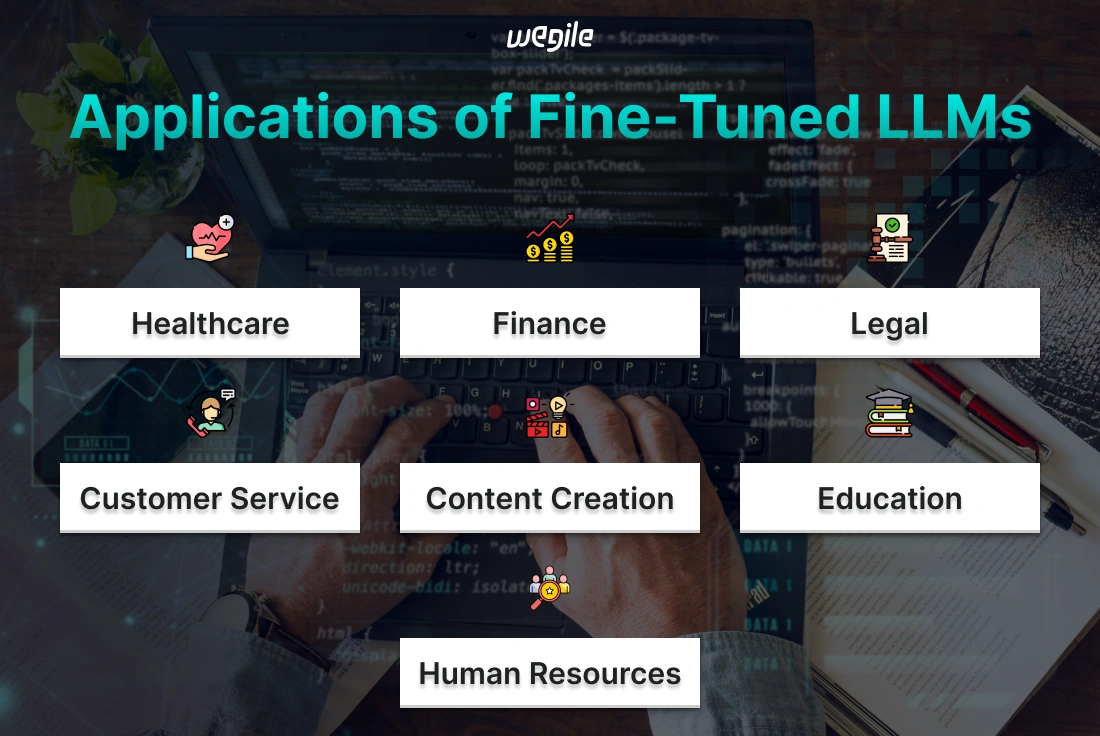

Fine-tuned LLMs are like highly skilled specialists: they handle complex jobs with ease, adapt readily to the needs of different industries, and work efficiently. Let’s explore some practical applications and see how these models are making waves across different sectors.

Fine-tuned LLMs are changing the way we approach health care. These models can be trained on large volumes of medical literature, patient records, and clinical guidelines, and then used to help doctors diagnose and treat patients more accurately.

Example: Consider a fine-tuned LLM that specializes in rare diseases. A doctor enters a patient’s symptoms, and the model swiftly draws on the mountain of medical data and literature it learned from to suggest possible diagnoses and therapies. This can speed up diagnosis and help patients receive more precise treatment.

Fine-tuned LLMs are also being used to automate financial processes that would otherwise require significant human effort. Whether analyzing financial documents or generating reports, these models help financial institutions and fintech businesses save time and reduce errors.

Example: A bank might use a fine-tuned LLM to review and process loan applications. The model can evaluate the applicant’s financial history, assess risk, and even generate a summary report for the loan officer, all within minutes. This speeds up the approval process and helps ensure that the bank’s decisions are based on comprehensive data analysis.

The legal industry is another field where fine-tuned LLMs are having a significant influence. These models can be trained on statutes, legal precedents, and case law, which opens up many possibilities for assisting legal professionals with tasks such as research, document drafting, and case outcome prediction.

Example: A law firm could use a fine-tuned LLM to draft contracts or legal briefs. Trained on thousands of similar documents, the model can generate accurate drafts tailored to the specific needs of the case. This reduces the time lawyers spend on drafting and frees them for tasks that require more strategy and client interaction.

Fine-tuned LLMs are also transforming customer service, enabling companies to offer instant, accurate, and personalized support. These models can be trained on customer inquiries, FAQs, and support documentation to provide quick and relevant responses.

Example: A tech company might deploy a fine-tuned LLM to handle customer inquiries on its website. When a customer asks how to troubleshoot an issue, the model can instantly provide a detailed response based on the company’s support documentation. It can even guide the customer through complex steps, much like a live agent would. This improves customer satisfaction through quick resolutions and frees human agents to handle more complex or sensitive issues.

Fine-tuned LLMs are becoming indispensable tools in content creation. Writers, marketers, and creative professionals rely on these models to generate everything from blog posts to marketing copy, because the models can produce output tailored to a specific audience or brand voice.

Example: A marketing agency could use a fine-tuned LLM to draft social media posts for a new product launch. By training the model on previous successful campaigns, brand guidelines, and customer preferences, the agency can produce engaging content that resonates with the target audience. This speeds up content production while keeping it consistent and creative across all platforms.

Education is another field where fine-tuned LLMs are making a significant impact. These models can be tailored to provide personalized learning experiences that adapt to the needs and pace of individual students.

Example: An online education platform might use a fine-tuned LLM to create customized lesson plans. The model analyzes a student’s past performance and learning preferences, then generates exercises, quizzes, and explanatory content aligned with that student’s needs. This helps students learn more effectively and stay engaged in their studies.

In human resources, fine-tuned LLMs are being used to improve recruitment and employee management. These models can analyze resumes, match candidates to job descriptions, and even assist in performance evaluations.

Example: A company could employ a fine-tuned LLM to sift through hundreds of resumes and identify the most qualified candidates for an open position. The model can highlight relevant experience, skills, and qualifications, presenting HR managers with a shortlist of top candidates. This reduces the time spent on manual resume screening and helps ensure a better fit between candidates and job roles.

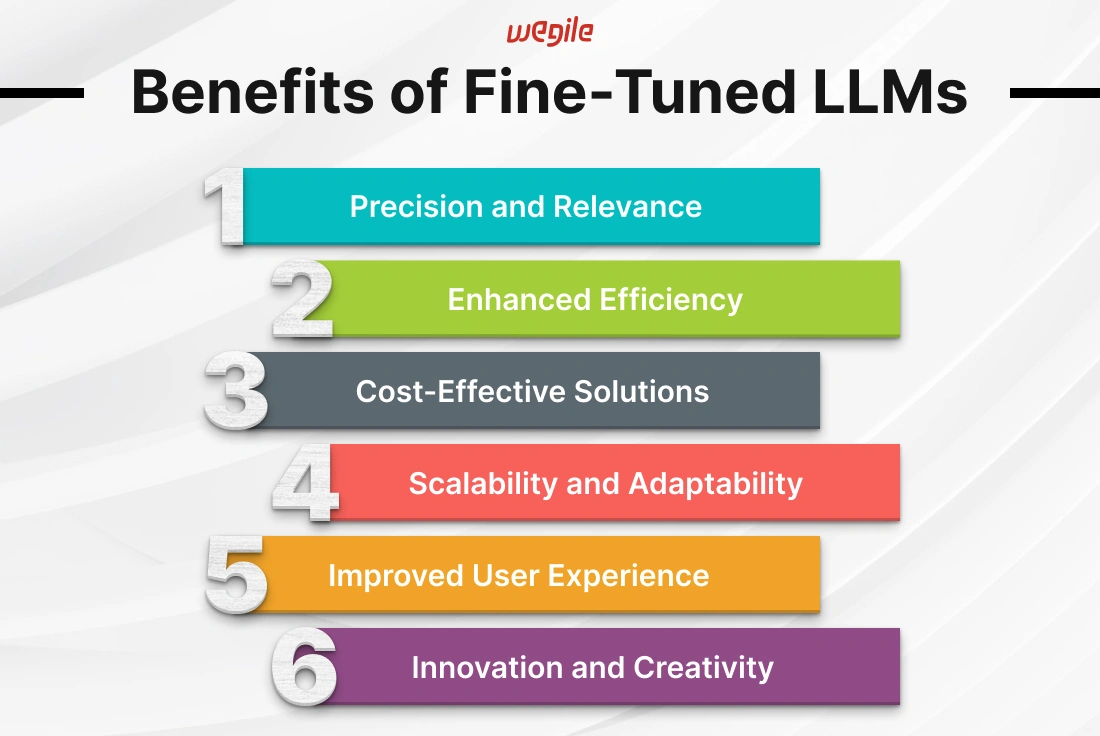

Fine-tuned LLMs offer a range of benefits that make them valuable across many use cases. Let’s explore how these specialized models can give you an edge.

One of the standout benefits of fine-tuned LLMs is their ability to deliver precise and relevant results. Unlike generic models, which may give broad and sometimes vague output, fine-tuned LLMs are trained to understand the specific nuances of a particular field or task.

Why It Matters: This precision means the model’s outputs are not only accurate but also directly applicable to the context at hand. Whether you’re drafting a legal document, diagnosing a patient, or analyzing financial data, a fine-tuned LLM provides insights that are on point. This saves you time and reduces errors.

Fine-tuned LLMs streamline processes that would otherwise be time-consuming or labor-intensive. They can handle complex tasks quickly and effectively, freeing up human resources for more strategic work.

Why It Matters: Fine-tuned LLMs can automate complex tasks such as drafting reports, processing customer inquiries, or screening job applicants. This lets you focus on what truly matters: innovating and growing your business.

Fine-tuned LLMs can deliver substantial cost savings because they automate and optimize a wide range of tasks efficiently. They speed up routine work and help prevent costly errors.

Why It Matters: Cutting costs without compromising quality is a major advantage in any industry, and fine-tuned LLMs help you achieve more with less. That makes them a smart investment for businesses looking to stay competitive.

Fine-tuned LLMs are not only powerful but also highly adaptable. As your needs evolve, they can be re-tuned or scaled to handle new tasks or larger datasets, making them a flexible tool for long-term growth.

Why It Matters: This scalability ensures that your AI solution grows with your business. Whether you’re expanding into new markets, dealing with increasing data volumes, or facing new challenges, a fine-tuned LLM can be adapted to meet your needs.

Fine-tuned LLMs can significantly enhance the user experience, especially in areas such as customer service and content creation. They provide faster, more accurate responses and generate content that feels more personalized and relevant.

Why It Matters: Today, a great user experience is not something you can compromise on. Fine-tuned LLMs help you deliver the quick, accurate, and personalized interactions that keep customers happy and engaged.

Finally, fine-tuned LLMs open the door to new levels of innovation and creativity. They can generate ideas, suggest new approaches, and even help create original content, pushing the boundaries of what’s possible.

Why It Matters: In any field, a tool that can think outside the box with you is invaluable. Fine-tuned LLMs enhance your current capabilities and inspire new ways of thinking and working.

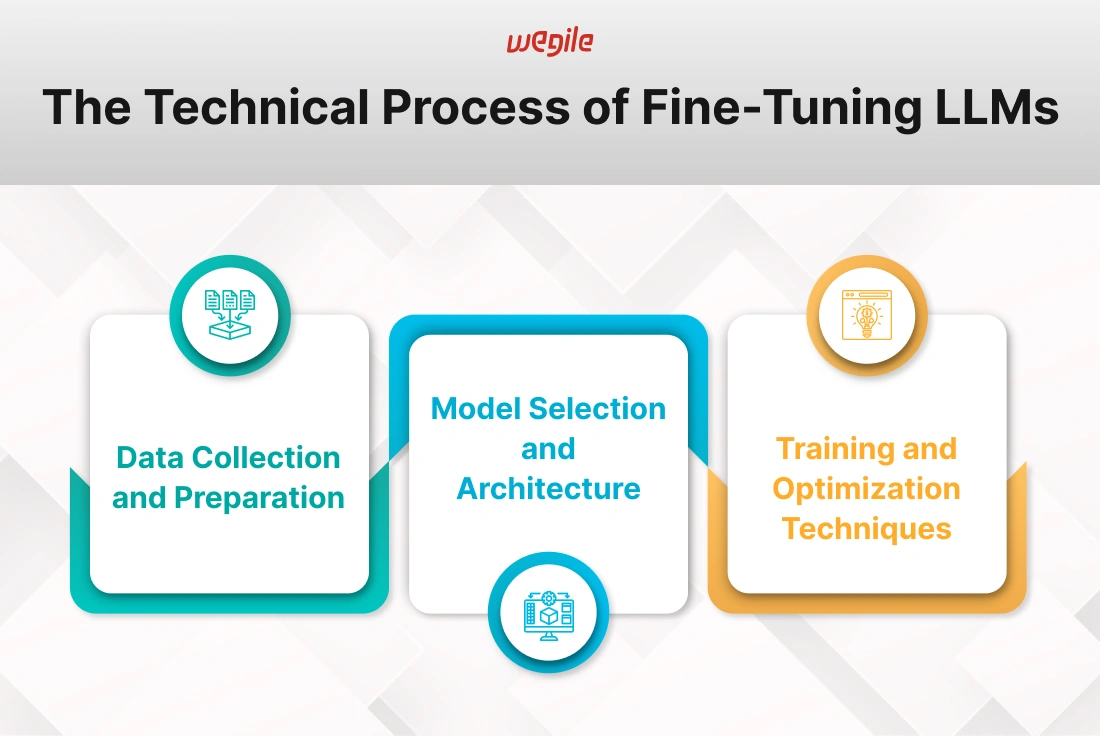

Fine-tuning a Large Language Model (LLM) is like teaching an already smart AI to become an expert in a specific area. The process involves several important steps, each critical to ensuring the model performs well for its intended purpose. Let’s break down the key stages.

The first and perhaps most crucial step in fine-tuning an LLM is gathering and preparing the right data. Imagine trying to teach a chef to specialize in Italian cuisine. You’d want to provide them with authentic recipes, not just general cooking tips. The same goes for AI models.

Gathering Data: Start by collecting datasets relevant to the task you want your model to excel at. For instance, if you’re fine-tuning an LLM to generate legal documents, you’ll need a wide range of legal texts, cases, and regulations. The quality and relevance of the data directly affect how well fine-tuning works.

Preparing Data: The collected data needs to be cleaned and properly structured. This includes removing noise and irrelevant information, keeping the format consistent, and sometimes labeling data to guide the model’s learning process. High-quality data preparation is like laying a solid foundation for a building: if the foundation is weak, the final product won’t be stable.
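
A minimal sketch of this cleaning step in Python, using only the standard library. It assumes each raw record is a dictionary with hypothetical `prompt` and `response` fields and writes one JSON object per line (JSONL), a format many fine-tuning tools accept, although the exact field names your tool expects may differ. It normalizes Unicode, strips invisible characters, collapses whitespace, drops incomplete examples, and removes exact duplicates.

```python
import json
import re
import unicodedata


def clean_text(text: str) -> str:
    """Normalize Unicode, drop invisible characters, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)  # e.g. non-breaking space -> space
    # Drop Unicode "C*" characters (control, format, private-use, unassigned),
    # but keep newlines and tabs.
    text = "".join(
        ch for ch in text if ch in "\n\t" or not unicodedata.category(ch).startswith("C")
    )
    text = re.sub(r"[ \t]+", " ", text)  # collapse runs of spaces and tabs
    return text.strip()


def prepare_records(raw_records):
    """Clean raw prompt/response pairs, skip incomplete ones and exact duplicates,
    and return them as JSON Lines strings."""
    seen = set()
    lines = []
    for rec in raw_records:
        prompt = clean_text(rec.get("prompt", ""))
        response = clean_text(rec.get("response", ""))
        if not prompt or not response:
            continue  # incomplete example
        key = (prompt.lower(), response.lower())
        if key in seen:
            continue  # duplicate after cleaning (case-insensitive)
        seen.add(key)
        lines.append(json.dumps({"prompt": prompt, "response": response}, ensure_ascii=False))
    return lines


# Hypothetical raw records, e.g. collected from a legal FAQ.
raw = [
    {"prompt": "What is\u00a0a tort?", "response": "A civil wrong that causes harm.\u200b"},
    {"prompt": "what is a tort?", "response": "a civil wrong that causes harm."},
    {"prompt": "Define estoppel.", "response": ""},
]
for line in prepare_records(raw):
    print(line)
```

Only the first record survives: the second is a duplicate once cleaned and lowercased, and the third has no response. Real pipelines usually add near-duplicate detection and domain-specific filters on top of this basic shape.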

Picking the right LLM architecture for fine-tuning is like selecting the right tool for a job. You wouldn’t use a hammer to tighten a screw, and the same principle applies to fine-tuning LLMs.

Model Selection: Different LLMs come with different strengths and sizes. Some are lightweight and quick, while others are heavyweight with vast knowledge but require more computational power. Selecting the appropriate model depends on your specific needs, whether it’s for speed, accuracy, or the ability to handle complex tasks.
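
To make the size trade-off concrete, here is a rough back-of-the-envelope estimate in Python. It assumes weights-only memory is parameters × bytes per parameter, and uses a commonly cited rule of thumb of about 16 bytes per parameter for full fine-tuning with the Adam optimizer in mixed precision (half-precision weights and gradients, full-precision master weights, and two optimizer moments). Activations, batch size, and framework overhead are ignored, so real requirements are higher; the model sizes are illustrative.

```python
# Rough bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def full_finetune_memory_gb(num_params: float, bytes_per_param: float = 16.0) -> float:
    """Rule-of-thumb memory for full fine-tuning with Adam in mixed precision:
    ~16 bytes/param (fp16 weights + fp16 grads + fp32 master weights + two fp32
    Adam moments). Activations and framework overhead are NOT included."""
    return num_params * bytes_per_param / 1e9


# Illustrative model sizes (parameter counts), not specific products.
for label, n in [("1B", 1e9), ("7B", 7e9), ("70B", 70e9)]:
    print(f"{label}: weights (fp16) ~{weights_memory_gb(n):.0f} GB, "
          f"full fine-tune ~{full_finetune_memory_gb(n):.0f} GB")
```

A gap like this, roughly eight times the weights-only figure, is one reason smaller models, quantized weights, or parameter-efficient methods are attractive when hardware is limited.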

Architecture Considerations: The architecture of an LLM includes layers of neurons, attention mechanisms, and parameters that define its capacity and performance. When fine-tuning, you might adjust how these elements are used for your task. For instance, you may choose which layers to update or adapt the attention layers (for example, with small add-on adapter modules) to help the model focus on relevant parts of the input data. Scalability is also a factor: check whether the model can handle more data or more complex tasks as your project grows. Balancing these aspects helps make the fine-tuned model both effective and efficient.

Once you have the right data and model, the next stage is training and optimization. Think of it as the part of the process where the AI gets plenty of practice until it becomes an expert.

Training Techniques: During fine-tuning, the LLM is exposed to the prepared dataset and learns to generate responses or perform tasks based on this new information. Techniques such as supervised learning (training on labeled input-output pairs) or unsupervised learning (continued training on unlabeled domain text) can be employed. It’s a bit like guiding a student through practice problems: they get better with each iteration.
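
To make the supervised case concrete: one common choice in supervised fine-tuning is to compute the training loss only on the response tokens, so the model learns to produce answers rather than to reproduce prompts. The sketch below shows that masked negative log-likelihood in plain Python; the per-token log-probabilities are hypothetical numbers standing in for what a real model would output.

```python
import math


def masked_nll(token_log_probs, loss_mask):
    """Average negative log-likelihood over tokens where loss_mask is 1.

    token_log_probs: log p(token_t | earlier tokens), one value per target token.
    loss_mask: 1 for response tokens (trained on), 0 for prompt tokens (ignored).
    """
    if len(token_log_probs) != len(loss_mask):
        raise ValueError("log-probs and mask must have the same length")
    selected = [lp for lp, m in zip(token_log_probs, loss_mask) if m]
    if not selected:
        raise ValueError("mask selects no tokens")
    return -sum(selected) / len(selected)


# Hypothetical example: 3 prompt tokens followed by 2 response tokens.
log_probs = [math.log(0.1), math.log(0.2), math.log(0.3), math.log(0.5), math.log(0.25)]
mask = [0, 0, 0, 1, 1]
loss = masked_nll(log_probs, mask)
print(f"loss = {loss:.4f}")
```

The low probabilities on the prompt tokens do not affect the loss at all; only how well the model predicts the two response tokens does. Training frameworks apply the same idea to batches of real model outputs.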

Optimization Strategies: Optimization is about refining the fine-tuning process for better efficiency. This includes adjusting learning rates, using regularization techniques to prevent overfitting, and employing strategies such as early stopping. These techniques help the model learn effectively and generalize well to new, unseen data.
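
Two of these strategies are easy to sketch in plain Python: a learning-rate schedule with linear warmup followed by cosine decay (one common schedule, not the only one) and a patience-based early-stopping check on validation loss. The default values such as `base_lr=2e-5` and 100 warmup steps are illustrative assumptions, not recommendations. Regularization such as weight decay or dropout would be configured in your training framework and is not shown.

```python
import math


def lr_at_step(step, base_lr=2e-5, warmup_steps=100, total_steps=1000, min_lr=0.0):
    """Linear warmup to base_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # hold at min_lr after total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


class EarlyStopping:
    """Signal a stop when validation loss has not improved by more than
    min_delta for `patience` consecutive evaluations."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_evals = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # improvement: remember it and reset the counter
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


# Hypothetical validation losses from successive evaluations.
stopper = EarlyStopping(patience=2)
for epoch, val_loss in enumerate([1.20, 0.95, 0.90, 0.91, 0.93, 0.89]):
    if stopper.should_stop(val_loss):
        print(f"stopping after epoch {epoch}, best val loss {stopper.best}")
        break
```

Here training stops after the two evaluations that fail to beat 0.90, before the final value is ever seen. That is the trade-off with patience: a larger value tolerates noisy validation curves but spends more compute.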

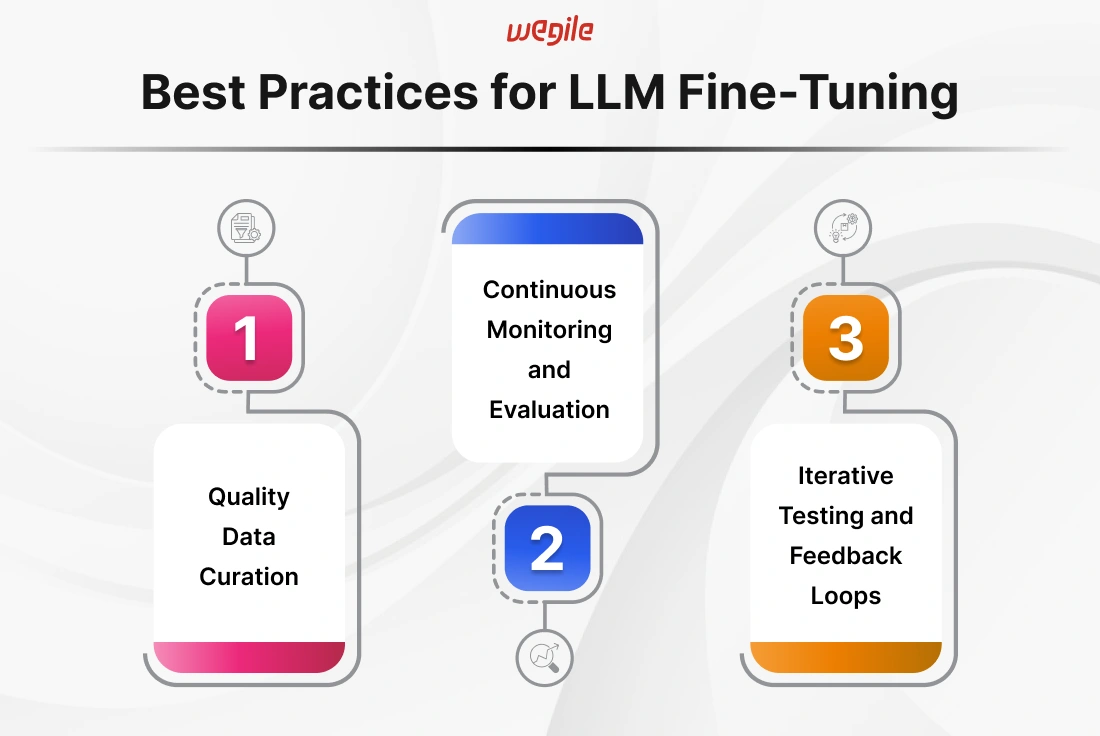

Fine-tuning an LLM isn’t just about tweaking a few settings and hitting “run.” To truly unlock the model’s potential and keep the process effective and efficient, you need to follow some best practices. Let’s look at how you can make the most of your fine-tuning efforts.

High-quality data is the backbone of an effective fine-tuning procedure. Like a sponge, your LLM will soak up whatever information you give it, so the better the inputs, the better the outcomes.

Curate with Care: Start by gathering datasets that are not only relevant but also diverse and representative of the task at hand. Avoid feeding the model irrelevant or low-quality data, which can cause it to produce biased or poor results. It’s like making a gourmet meal from stale ingredients: the result will be unsatisfactory no matter how skilled the cook.

Select Strategically: Choose datasets that are specific to the domain or task you’re fine-tuning for. For example, use up-to-date medical literature and patient data when you’re training a model to assist with medical diagnostics. This ensures that the model’s learning is both accurate and applicable.
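
A minimal sketch of rule-based curation in plain Python, assuming each record is a dictionary with hypothetical `text` and `published` fields. It drops very short documents, documents published before a cutoff date (a crude stand-in for "up to date"), and documents that mention none of a set of domain keywords. The thresholds and keywords are illustrative; production pipelines often use trained quality or topic classifiers instead of keyword matching.

```python
from datetime import date


def curate(records, domain_keywords, min_chars=200, cutoff=date(2020, 1, 1)):
    """Keep records that are long enough, published on or after `cutoff`,
    and mention at least one domain keyword."""
    keywords = [k.lower() for k in domain_keywords]
    kept = []
    for rec in records:
        text = rec["text"]
        if len(text) < min_chars:
            continue  # too short to teach the model much
        if rec["published"] < cutoff:
            continue  # possibly outdated for a fast-moving field
        lowered = text.lower()
        if not any(k in lowered for k in keywords):
            continue  # no domain keyword found: likely off-topic
        kept.append(rec)
    return kept


# Hypothetical documents for a medical-diagnostics model.
guideline = "Clinical guideline on diagnosis and treatment. " * 5
docs = [
    {"text": guideline, "published": date(2023, 5, 1)},
    {"text": guideline, "published": date(2012, 3, 1)},  # outdated
    {"text": "Quarterly stock market commentary. " * 10, "published": date(2023, 6, 1)},  # off-topic
    {"text": "Diagnosis notes.", "published": date(2024, 1, 1)},  # too short
]
kept = curate(docs, ["diagnosis", "clinical", "treatment"])
print(f"kept {len(kept)} of {len(docs)} documents")
```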

Fine-tuning an LLM is not a “set it and forget it” process. You need to keep monitoring the model to make sure it continues to perform well.

Monitor Regularly: Implement ongoing monitoring to track the model’s performance over time. This means checking that the model stays accurate and relevant and shows no signs of drift. Regular checks help you identify problems before they escalate.
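
One simple way to watch for drift, sketched in plain Python: keep a rolling window of recent graded outputs (graded by spot checks, user ratings, or automated tests, however your team labels them) and raise a flag when accuracy in that window falls more than a tolerance below the baseline measured at launch. The class name, window size, and tolerance are hypothetical choices for illustration.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` graded outputs and flag
    drift when it drops more than `tolerance` below `baseline`."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # oldest results fall off automatically

    def record(self, correct: bool) -> None:
        self.results.append(1 if correct else 0)

    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def drifting(self) -> bool:
        # Only judge once the window is full, to avoid noisy early alarms.
        if len(self.results) < self.results.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


# Hypothetical stream: 10 correct answers, then 3 wrong ones.
monitor = RollingAccuracyMonitor(baseline=0.90, window=10, tolerance=0.05)
for correct in [True] * 10 + [False] * 3:
    monitor.record(correct)
print(monitor.accuracy(), monitor.drifting())
```

A flag like this is a prompt to investigate (new kinds of inquiries, changed products, stale training data), not an automatic verdict.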

Evaluate Thoroughly: Use a variety of evaluation metrics to assess the model’s performance. Don’t just rely on a single accuracy score. Consider other factors like precision, recall, and user satisfaction. This gives you a more holistic view of how well the model is doing and where it might need adjustments.
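
A short illustration of why a single accuracy score can mislead, in plain Python. The labels are hypothetical, for example a model flagging risky loan applications (1 = risky). Accuracy looks high because most applications are not risky, while precision and recall show that the model misses half of the risky cases and that half of its flags are wrong.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    fn = sum(1 for t, p in pairs if p != positive and t == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical labels: 1 = risky application, 0 = not risky.
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]
print("accuracy:", accuracy(y_true, y_pred))
print("precision, recall, F1:", precision_recall_f1(y_true, y_pred))
```

User satisfaction cannot be computed this way, but it can be tracked alongside these metrics through ratings or surveys.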

Even after fine-tuning, your model isn’t set in stone. It’s crucial to keep testing, refining, and incorporating feedback.

Test Iteratively: Conduct regular testing to see how the model handles different inputs and scenarios. This helps you identify areas where the model might struggle or produce unexpected results. Think of it as a dress rehearsal before the big show. You want to iron out any kinks before going live.
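
A minimal sketch of such a dress rehearsal in plain Python: a small regression suite of test prompts, each with phrases the answer must or must not contain, run after every round of fine-tuning. `fake_generate` is a hypothetical stand-in for your model’s inference call. Keyword checks are a deliberately simple proxy; many teams also use human review or graded rubrics.

```python
def run_regression_suite(generate, cases):
    """Run each test prompt through `generate` and check required and forbidden
    phrases (case-insensitive). Returns a list of (prompt, problem) failures."""
    failures = []
    for case in cases:
        output = generate(case["prompt"]).lower()
        for phrase in case.get("must_include", []):
            if phrase.lower() not in output:
                failures.append((case["prompt"], f"missing '{phrase}'"))
        for phrase in case.get("must_not_include", []):
            if phrase.lower() in output:
                failures.append((case["prompt"], f"contains forbidden '{phrase}'"))
    return failures


# Hypothetical stand-in for the fine-tuned model; replace with a real inference call.
def fake_generate(prompt: str) -> str:
    if "reset" in prompt.lower():
        return "To reset your router, hold the reset button for 10 seconds."
    return "I'm not sure. Please contact support."


cases = [
    {"prompt": "How do I reset my router?", "must_include": ["reset button"]},
    {"prompt": "Is my warranty still valid?", "must_include": ["warranty"],
     "must_not_include": ["guaranteed"]},
]
for prompt, problem in run_regression_suite(fake_generate, cases):
    print(f"FAIL: {prompt!r}: {problem}")
```

Keeping the suite fixed across fine-tuning rounds makes regressions visible: a case that passed last time and fails now points to something the latest training data changed.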

Incorporate Feedback: Build a system that lets users or domain experts provide feedback on how the model is performing. This helps you keep refining the model so it serves people’s real-world needs.
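
A sketch of the data side of such a feedback loop, in plain Python: store each rating alongside the prompt and response, summarize ratings by category to see where the model struggles, and pull low-rated examples for expert review so corrected versions can feed the next round of fine-tuning. The `Feedback` fields and the 1-to-5 rating scale are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Feedback:
    prompt: str
    response: str
    rating: int       # hypothetical scale: 1 (poor) to 5 (excellent)
    category: str     # e.g. "billing" or "troubleshooting"
    comment: str = ""


def average_rating_by_category(entries):
    """Mean rating per category, to show where the model struggles."""
    totals = defaultdict(lambda: [0, 0])  # category -> [sum of ratings, count]
    for fb in entries:
        totals[fb.category][0] += fb.rating
        totals[fb.category][1] += 1
    return {cat: total / count for cat, (total, count) in totals.items()}


def low_rated(entries, threshold=2):
    """Entries rated at or below `threshold`, queued for expert review and
    possible correction before reuse as fine-tuning data."""
    return [fb for fb in entries if fb.rating <= threshold]


log = [
    Feedback("How do I update my card?", "Go to Settings > Billing.", 5, "billing"),
    Feedback("Why was I charged twice?", "Charges are never duplicated.", 1, "billing",
             "Incorrect: the duplicate charge was real."),
    Feedback("My router keeps rebooting.", "Try updating the firmware.", 4, "troubleshooting"),
]
print(average_rating_by_category(log))
print([fb.prompt for fb in low_rated(log)])
```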

Fine-tuning LLMs is rewarding but difficult, and understanding the potential problems and how to overcome them is essential to doing it well. Let’s examine some common issues and key concerns.

Data privacy and ethics should be at the forefront of your mind when fine-tuning LLMs, especially for sensitive or proprietary applications.

Privacy Matters: Using sensitive or proprietary data can enhance the model’s relevance. However, it also raises significant privacy concerns. You need to ensure that any data used is handled with the utmost care. This includes anonymizing personal information and securing data storage. You also need to follow all relevant regulations like GDPR. Ignoring these aspects can lead to breaches of trust or legal repercussions.
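
As a small illustration of anonymization, here is a regex-based redaction pass in Python that replaces email addresses and phone-number-like strings with placeholders before text enters a training set. Treat it as a sketch rather than a compliance tool. Simple patterns miss names, addresses, and many ID formats (the name in the example survives), and they can also catch unrelated digit sequences such as dates. Regex patterns alone are not sufficient for regulations like the GDPR.

```python
import re

# Illustrative patterns only. They catch common email and phone formats, but miss
# names, postal addresses, ID numbers, and many international formats, and the
# phone pattern can also match other long digit runs such as dates.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like strings with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Contact Jane at jane.doe@example.com or +1 (555) 123-4567."))
```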

Ethical Implications: There are ethical considerations beyond privacy in how the model is trained and used. For instance, training a model on biased data can lead to outputs that reinforce harmful stereotypes or unfair practices. Be mindful of the source and nature of your data. Strive to use diverse, representative datasets and consider the broader impact of the model’s decisions and actions.
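
One simple check for representativeness, sketched in plain Python: count how often each value of an attribute appears in the training data and flag values that fall below a minimum share. The `region` attribute, the records, and the 10% threshold are hypothetical. Balanced counts alone do not guarantee unbiased outputs, so this is a starting point rather than a full bias audit.

```python
from collections import Counter


def representation_report(records, attribute, min_share=0.10):
    """Return each attribute value's share of the data and the values whose
    share falls below `min_share`."""
    counts = Counter(rec[attribute] for rec in records)
    total = sum(counts.values())
    shares = {value: n / total for value, n in counts.items()}
    underrepresented = sorted(value for value, share in shares.items() if share < min_share)
    return shares, underrepresented


# Hypothetical training records tagged with the region they came from.
records = [{"region": "north"}] * 12 + [{"region": "south"}] * 7 + [{"region": "east"}]
shares, flagged = representation_report(records, "region")
print(shares)
print("under-represented:", flagged)
```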

Fine-tuning LLMs carries significant monetary and computational costs, so efficient resource management is crucial to avoid unnecessary expenses.

Computational Resources: Fine-tuning requires significant computational power, often in the form of high-performance GPUs or cloud-based solutions. Costs can add up quickly, especially for large models or extensive fine-tuning, so plan your resource allocation carefully. Consider whether the benefits of fine-tuning justify the costs, and explore ways to optimize resource usage, such as using smaller, more efficient models or limiting the scope of fine-tuning.
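
One widely used way to limit the scope of fine-tuning is a parameter-efficient method such as LoRA (Low-Rank Adaptation), which freezes the original weights and trains a small low-rank update instead. It is named here as one example technique, not as a method this article prescribes. The arithmetic below compares trainable parameters for a single weight matrix; the 4096 × 4096 shape and rank 8 are hypothetical values chosen for illustration.

```python
def full_update_params(d: int, k: int) -> int:
    """Trainable parameters when updating a d x k weight matrix directly."""
    return d * k


def lora_update_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update W + B @ A,
    where B is d x r and A is r x k (W itself stays frozen)."""
    return r * (d + k)


d = k = 4096  # hypothetical shape of one attention projection matrix
r = 8         # hypothetical LoRA rank
full = full_update_params(d, k)
lora = lora_update_params(d, k, r)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

Fewer trainable parameters means smaller gradients and optimizer state, which is where much of the memory and cost saving comes from. The frozen base model still has to fit in memory, so this complements, rather than replaces, choosing an appropriately sized model.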

Cost Management: In addition to hardware, the time and expertise required for fine-tuning can also be costly. Training staff or hiring experts to manage the process can strain budgets. One way to keep expenditures in check during a fine-tuning project is to set clear targets and deadlines. Compare several platforms and tools to discover the best budget-friendly choices. Also, consider whether it would be more beneficial to use pre-fine-tuned models or outsource the work.

Fine-tuning LLMs is more than just an optimization approach; it's the key to unlocking the full potential of your AI projects. By tailoring these models to your specific needs, you can achieve more accurate results, enhance user experiences, and bring a higher level of intelligence to your applications. Whether you're fine-tuning LLMs for customer engagement, content creation, or any other specialized task, the benefits are clear: a smarter, more relevant AI that drives success.

Looking to fine-tune your AI models or build a generative AI app from scratch? Wegile's generative AI app development services can help you create solutions that stand out. Let us partner with you to turn your AI ambitions into reality. Reach out to Wegile today, and let's start building together!
